The Next AI Risk is Not the Model. It is the Data

The next big AI story will not be about chatbots writing emails. It will be about systems taking actions inside real businesses, at real speed, with real consequences.

A new global survey of 600 senior data leaders at companies with more than $500 million in revenue, published in Informatica’s “CDO Insights 2026: Data governance and the trust paradox of data and AI literacy take center stage” report, suggests that moment is closer than most organisations admit.

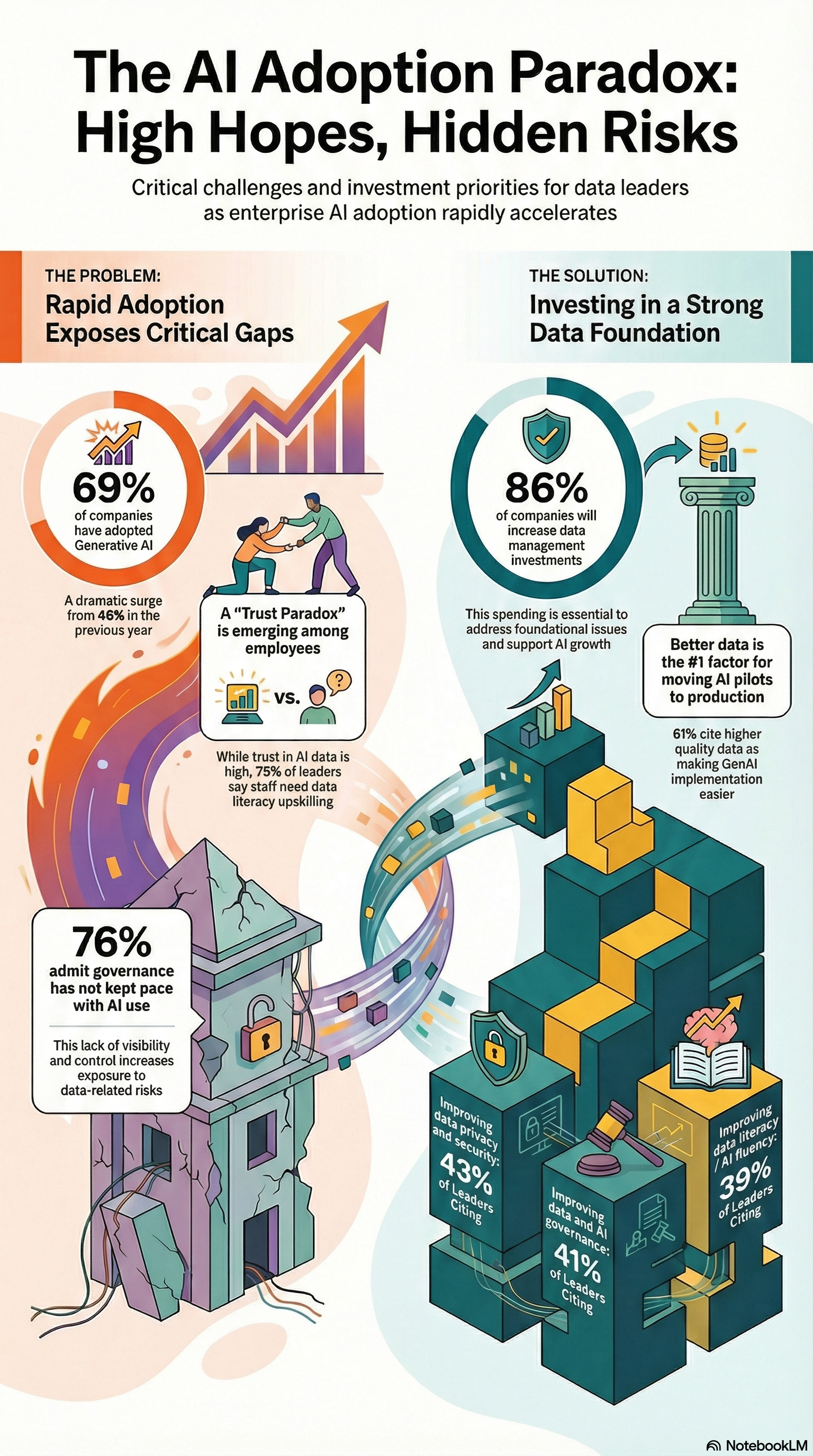

Nearly seven in ten (69%) say generative AI is already embedded into business practices, up from 48% and 45% in the prior two years. Almost half (47%) say they have already adopted agentic AI, the emerging category of systems designed to act autonomously to reach defined goals. Another 31% expect to adopt agentic AI by the end of this year.

The adoption curve looks steep. The foundations underneath it look shaky.

Adoption is accelerating. Confidence is not.

The survey’s most revealing split is between momentum and readiness.

On one side: executives and teams are moving AI from pilots into everyday workflows. On the other: the people responsible for enterprise data say the basics are still unresolved. According to the press release, 91% of data leaders say data reliability remains a barrier to moving more generative AI initiatives from pilot to production. Even more telling, 90% say they are concerned that new AI pilots are progressing without resolving the data reliability issues uncovered by earlier efforts.

That is how organisations end up with “AI everywhere” and accountability nowhere.

The “trust paradox” inside the enterprise

Employees are increasingly comfortable using AI. Data leaders are increasingly nervous about what AI is being fed.

The press release describes a “trust paradox”: 65% of respondents say most or all of their organisation trusts the data being used in AI efforts, even while data leaders point to persistent quality and governance gaps.

Emilio Valdés, SVP Sales International at Informatica, spells out the risk in the press release:

“The promise of AI is immense, but so are the risks if you don’t have confidence in a reliable data foundation.”

He links the problem to skills and oversight, not just tooling:

“Our CDO Insights 2026 report reveals a ‘trust paradox’, although employees generally trust the data used for AI, many are lacking in data and AI literacy skills, and organisations lack underlying AI governance structures for achieving the responsible and ethical outcomes they desire.”

And he warns what that means in plain terms:

“This poses significant risk exposure and hurts confidence in AI initiatives.”

Agentic AI raises the stakes because it moves from answers to actions

Generative AI can mislead. Agentic AI can misfire.

Among organisations that have adopted or plan to adopt agentic AI, 50% cite data quality and retrieval concerns as a top challenge preventing AI agents from reaching production. Security concerns (43%) and lack of agentic AI expertise (42%) follow closely.

This matters because the next wave of use cases is not cosmetic. Data leaders are prioritising customer-facing and decision-heavy applications: 29% list enhancing customer experience and loyalty among their top AI categories for the next 12 months, while 28% point to improving business intelligence and decision-making, and 27% to regulatory and ESG compliance.

If the underlying data is inconsistent, the failure shows up where it hurts: customers, regulators, and revenue.

Governance is lagging behind daily use

Most organisations are not tracking AI usage tightly enough for the scale they are heading toward.

More than three quarters (76%) say their company’s visibility and governance have not fully kept pace with employees’ use of AI. At the same time, 75% say their workforce needs stronger data literacy skills, and 74% say greater AI literacy is required.

That combination is combustible: widespread use, high trust, weak oversight, and uneven literacy.

In the short term, it produces bad decisions. In the longer term, it creates the kind of incident that forces a business to slow down abruptly: regulatory scrutiny, a customer-facing failure, a security event, or an internal scandal where nobody can explain why an automated system acted the way it did.

The Middle East is moving fast. The gap will show sooner.

The Middle East is one of the most ambitious regions on AI, driven by national strategies, digital government programmes, and enterprise transformation. That speed is exactly why the survey’s warning lands harder here: fast adoption compresses the time organisations have to fix data and governance.

Yasser Shawky, Vice President, Emerging Markets (MEA) at Informatica, says the energy is real, but the bottleneck is readiness.

“Across the Middle East, we are seeing increased momentum around AI, driven by national strategies, digital government programmes and large-scale enterprise transformation. Our findings show that ambition is not the challenge, readiness is.”

He adds what readiness actually requires:

“To sustain this pace, organisations in the region must ensure that governance, data management and skills development evolve just as quickly as AI adoption itself.”

And he makes the forward-looking point many leaders avoid saying out loud:

“Those that invest now in data trust and AI literacy will be far better positioned to turn innovation into lasting competitive advantage.”

Spend is rising, but vendor sprawl is becoming the next problem

Most organisations now recognise that AI is pushing them into overdue spending on data foundations. The vast majority (86%) expect investment in data management to increase in 2026. The top drivers include improving data privacy and security (43%), improving data and AI governance (41%), and improving data literacy or AI fluency (39%).

But there is a catch: the market response to weak foundations is often “buy more tools.” The survey shows organisations anticipate partnering with an average of eight separate vendors to support AI management priorities in 2026, most commonly to improve data trust.

There is also a strategic split emerging. Today, 54% plan to use vendor-supplied AI agents, while 44% expect to develop and manage them internally. That decision will shape how dependent companies remain on external platforms, and how much control they retain when the next wave of regulation and audits arrives.

Shawky’s message is that the spending needs direction, not just volume:

“The priority now is to ensure these investments are directed where they deliver the greatest impact. Strengthening data reliability, modernising governance, and embedding AI and data literacy across the workforce should all be top priority.”

What this means next: a divide between “AI everywhere” and “AI you can defend”

If 2024 and 2025 were about proving AI could be used, 2026 looks like the year organisations learn whether AI can be governed.

The likely outcome is a split.

One group will keep shipping pilots into production, celebrate adoption metrics, and hope the data gaps do not surface publicly. The other will treat data reliability, governance, and literacy as the real AI stack, and build systems they can explain, audit, and defend when something goes wrong.

In a world moving toward agentic systems, that second approach is not slower. It is the only approach that scales without breaking.