The trust infrastructure race: how Dataiku wants to make agentic AI shippable

AI is entering the part of the enterprise stack where mistakes become liabilities: customer decisions, credit outcomes, insurance coverage, fraud calls, and public-sector eligibility. In that world, “cool demo” is not a milestone. Auditability is. If an AI system can’t be traced, explained, or switched off when it drifts, it doesn’t scale, no matter how good the model looks in a lab.

Kurt Muehmel, Head of AI Strategy at Dataiku, describes the industry’s state bluntly: “What we are seeing today are pilot deployments. The fact that there is no full traceability or explainability is a major reason there is still limited deployment to production. If I do not fully understand the system, I do not feel comfortable deploying it.”

That’s why the most important fight in enterprise AI right now isn’t model quality. It’s trust infrastructure: governance, traceability, and operational control. When AI systems move from “assistant” to “agent”, taking actions, triggering workflows, and touching customers, the risk stops being theoretical. It becomes contractual.

Muehmel offers a simple scenario that shows how quickly things can go sideways: “A hypothetical example is an insurance company using AI to help customers look up information in long policy documents. If the AI starts looking at the wrong source of data, such as outdated policy documents, customers could make decisions based on incorrect information and the insurer could be liable.”
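One way to reduce the risk in this hypothetical is to check where an answer's sources come from before the model ever sees them. The sketch below is illustrative and is not a Dataiku feature. It assumes each retrieved passage carries hypothetical `source_id`, `version`, and `effective_until` metadata, and that a document catalog records which version of each policy is currently in force. Any passage from an unknown, superseded, or expired document is dropped.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Passage:
    # Hypothetical metadata attached to each retrieved chunk of a policy document.
    source_id: str
    version: int
    effective_until: Optional[date]  # None means no expiry date is recorded
    text: str


def filter_current(
    passages: list[Passage], current_versions: dict[str, int], today: date
) -> list[Passage]:
    """Drop passages from unknown, superseded, or expired documents before generation."""
    kept = []
    for p in passages:
        if current_versions.get(p.source_id) != p.version:
            continue  # unknown source or outdated version: do not ground answers on it
        if p.effective_until is not None and p.effective_until < today:
            continue  # document is past its effective date
        kept.append(p)
    return kept


# Hypothetical catalog: version 3 of the home policy is the one in force.
catalog = {"home-policy": 3}
retrieved = [
    Passage("home-policy", 2, date(2023, 12, 31), "v2 text"),
    Passage("home-policy", 3, None, "v3 text"),
    Passage("travel-policy", 1, None, "unregistered text"),
]
print([p.text for p in filter_current(retrieved, catalog, date(2025, 11, 5))])
# ['v3 text']
```

Checking against a catalog, rather than keeping whichever version happens to be newest among the retrieved passages, matters: if retrieval only surfaces an outdated document, a "newest of what we found" rule would still pass it through.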

The data leaders’ confession: we’re deploying what we can’t defend

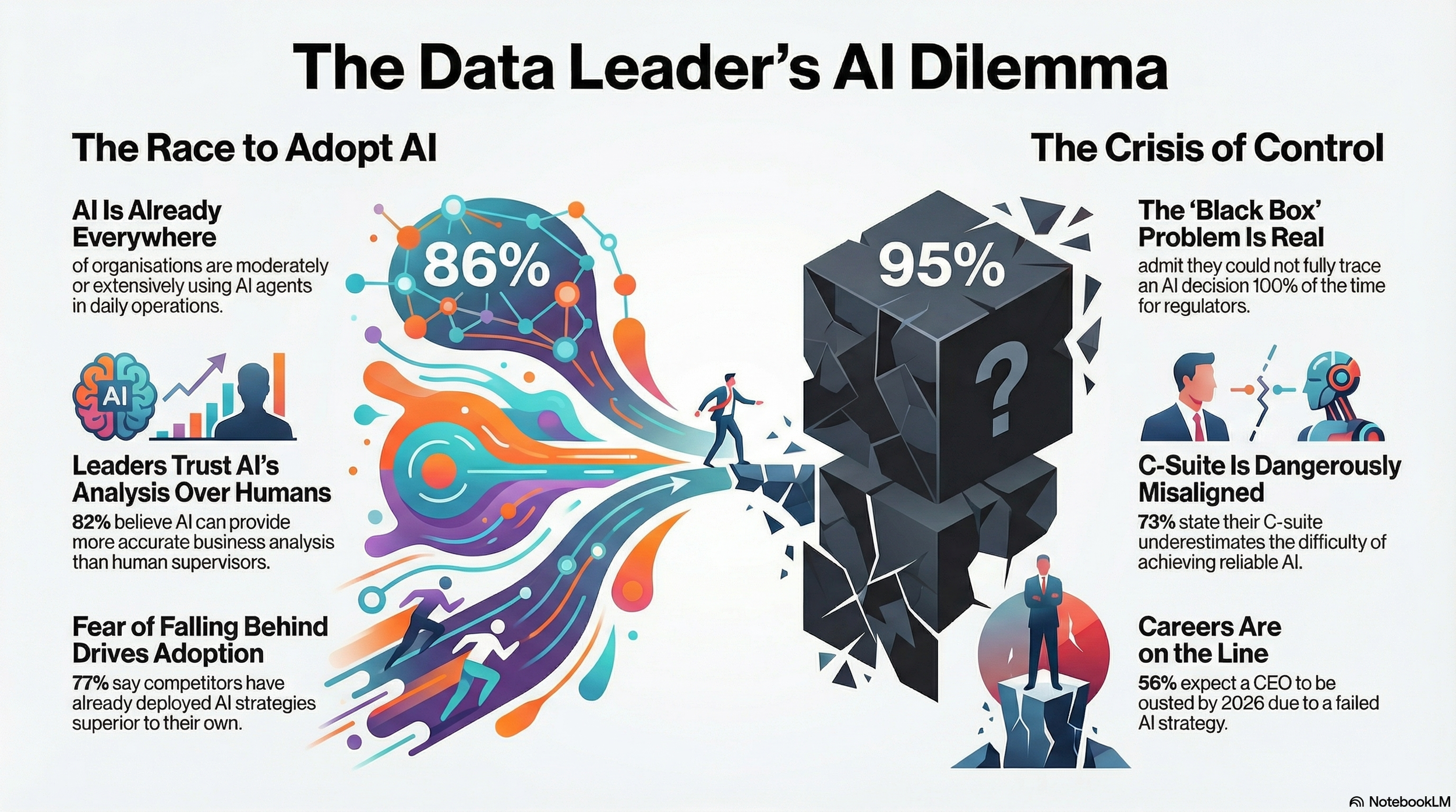

Dataiku’s global survey of 812 senior “Data Leaders” across the US, UK, France, Germany, the UAE, and APAC markets (Japan, Singapore, South Korea) reads less like a victory lap and more like a warning memo. Only 19% say they always require AI systems to “show their work” before approving them for production use. Nearly all (95%) doubt they could trace an AI decision for regulators 100% of the time.

Over half (52%) have delayed or blocked an AI agent deployment due to explainability concerns. And 59% report real-world business issues caused by hallucinations or inaccuracies this year.

There’s an important caveat baked into the methodology: the study targets an “elite/hard-to-reach audience” and the raw data are “unweighted,” so the results are only representative of those surveyed. Still, the pattern is coherent. Companies aren’t struggling to start with AI. They’re struggling to stand behind it.

Why governance is suddenly a revenue line, not a compliance chore

The survey’s most telling section isn’t even about models. It’s about blame, pressure, and organisational incentives, because the people who sign off on AI don’t get rewarded like innovators. They get punished like risk officers.

The report says CIOs and CDOs are most likely to be credited for AI gains (46%) and even more likely to be blamed for AI-related business losses (56%). More starkly, 56% expect a CEO to be ousted by 2026 due to a failed AI strategy or malfunction, and 60% believe their own role is at risk if AI doesn’t deliver measurable gains within 1–2 years.

At the same time, leaders feel squeezed by competition.

Close to 77% say competitors have deployed AI strategies superior to their own, while 41% suspect at least half of employees are using generative AI tools without the company’s notice or permission. The result is predictable: a rush to deploy, paired with a growing fear of what those deployments might do.

Muehmel agrees that governance is what makes production possible rather than what slows it down: “Exactly. That is why we build governance into every step in the process rather than applying a thin layer at the end.”

The platform pivot: from “AI pilots” to “AI factories”

This is the context for Dataiku’s AI Factory Accelerator, announced in Dubai on November 5, 2025. The company’s pitch is explicit: “The Dataiku AI Factory Accelerator combines Dataiku’s governed platform with NVIDIA accelerated computing to help enterprises turn AI pilots into production results at scale and across industries.”

The press release points to two motivations enterprises will recognise immediately: speed and control. Under the hood, Dataiku describes an optimisation for NVIDIA AI infrastructure (including NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs and NVIDIA networking) and integration with NVIDIA AI Enterprise software, including NVIDIA NIM microservices and NVIDIA CUDA-X Data Science libraries, all inside Dataiku’s no-code-to-full-code environment.

Muehmel positions the partnership less as “more compute” and more as a bridge between infrastructure and the teams expected to deliver outcomes: “NVIDIA provides strong capabilities for running AI models efficiently in production. Combining that computational horsepower with the ability for Dataiku’s universal AI platform to connect business experts and technology experts, and to provide governance, bridges the gap between the potential of powerful GPUs and the reality of business teams that are removed from that infrastructure.”

That’s the bet behind the “factory” metaphor: repeatable patterns, governance embedded early, and fewer heroic one-off deployments that collapse during audit.

Why financial services first, and government right behind it

The initial focus is financial services. Dataiku says the Accelerator “empowers banks, insurers, and asset managers” to deliver “trusted, production-grade generative and agentic AI” for risk modelling, fraud detection, and customer personalisation, building on its Financial Services Blueprint. It’s also supported in the NVIDIA AI Factory for Government reference design to “speed public sector AI adoption.”

This sequencing makes market sense. Banks and insurers buy AI. They also buy scrutiny. Governments buy AI. They buy even more scrutiny. If you can ship in those environments, you’ve earned the right to talk about “trusted production AI” in everyone else’s.

And in regulated industries, Dataiku leans hard on a sovereignty argument that is less about ideology than about control surface: “For regulated industries, it makes the path to production much shorter because organisations have full control over the hardware and software. They do not need to worry about sending something to a third party. Dataiku provides ease of use and speed of development on top of that fully managed, sovereign capability.”

The uncomfortable truth about LLMs: you can’t explain the model, so you must explain the system

A lot of enterprise marketing implies that “better models” will dissolve governance problems. In practice, LLM opacity is not a bug you patch. It’s a constraint you design around.

Muehmel is direct: “There are limits because the LLM itself is not explainable in the strict sense. We cannot say exactly why one input led to one output.” The workable compromise is transparency around the workflow: inputs, prompts, outputs, and downstream use.

“But in some cases that is acceptable if it is only one step in a broader process and the overall system is transparent. If you know what went in, what the request was, what came out, and how it is used, you can decide whether you accept that lack of explainability for that step within a given use case.”
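Those four questions (what went in, what the request was, what came out, and how it is used) map naturally onto a per-step trace record. The sketch below assumes a hypothetical `call_model` function standing in for whatever LLM client is in use; the field names and the `traced_call` wrapper are illustrative, not a Dataiku schema or API. The model step stays opaque, but everything around it is recorded.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class TraceRecord:
    step: str          # which step of the broader workflow this was
    inputs: list[str]  # what went in (e.g. IDs of the documents supplied as context)
    prompt: str        # what the request was
    output: str        # what came out
    used_for: str      # how the output is used downstream
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def digest(self) -> str:
        """SHA-256 of the record; recomputing it later reveals edits to the stored fields."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def traced_call(
    step: str,
    inputs: list[str],
    prompt: str,
    used_for: str,
    call_model: Callable[[str], str],
    log: list,
) -> str:
    """Run one opaque model step and append a trace record of it to `log`."""
    output = call_model(prompt)
    record = TraceRecord(step, inputs, prompt, output, used_for)
    log.append((record, record.digest()))
    return output


# Hypothetical usage: a lambda stands in for a real LLM call.
audit_log: list = []
answer = traced_call(
    step="policy-lookup",
    inputs=["home-policy:v3"],
    prompt="Is flood damage covered?",
    used_for="shown to customer alongside the cited source",
    call_model=lambda p: "See section 4 of home-policy:v3.",
    log=audit_log,
)
```

An in-memory list is only a placeholder here; a production audit trail would need durable, append-only storage for the digests to mean much to a regulator.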

When systems get more autonomous, the “kill switch” stops being a metaphor and becomes a requirement: “It is essential that organisations can turn off these systems at any time if necessary. We also see designs where systems are taken offline automatically if performance goes far out of bounds and creates significant liability or danger.”
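The automatic variant behaves like a circuit breaker. The sketch below is a minimal illustration under stated assumptions: each request is already judged in or out of bounds by some upstream check (hypothetical and out of scope here), the breaker trips when the rolling out-of-bounds rate exceeds a threshold, and a manual `turn_off()` covers the “at any time” requirement. The `KillSwitch` class and its parameters are illustrative, not a Dataiku API.

```python
from collections import deque


class KillSwitch:
    """Takes an AI system offline manually, or when its rolling error rate goes out of bounds."""

    def __init__(self, window: int = 50, max_error_rate: float = 0.2, min_samples: int = 10):
        self.outcomes = deque(maxlen=window)  # True = request judged out of bounds
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples        # avoid tripping on a handful of requests
        self.online = True
        self.reason = None

    def turn_off(self, reason: str) -> None:
        # Manual override: operators can switch the system off at any time.
        self.online = False
        self.reason = reason

    def record(self, out_of_bounds: bool) -> None:
        self.outcomes.append(out_of_bounds)
        if self.online and len(self.outcomes) >= self.min_samples:
            rate = sum(self.outcomes) / len(self.outcomes)
            if rate > self.max_error_rate:
                self.turn_off(f"error rate {rate:.0%} exceeded {self.max_error_rate:.0%}")

    def guard(self, handler, request):
        # Every request passes through here; nothing reaches the model while offline.
        if not self.online:
            raise RuntimeError(f"system offline: {self.reason}")
        return handler(request)


switch = KillSwitch(window=20, max_error_rate=0.25, min_samples=10)
for flagged in [False] * 6 + [True] * 4:
    switch.record(flagged)
print(switch.online, switch.reason)
# False error rate 40% exceeded 25%
```

The breaker deliberately has no automatic reset: once tripped, bringing the system back online is left to a human decision, which matches the liability argument in the rest of this piece.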


The biggest reason enterprises are moving cautiously isn’t fear of the future. It’s fear of the contract they’ve already signed. “Where things are today is that the enterprise providing the final service, such as the bank, is responsible. This is one reason banks and other enterprises are moving relatively slowly: they are carrying the liability. AI providers are often disclaiming responsibility in contracts, and enterprises need to review those contracts very carefully.”

That line explains the market’s shape: pilots proliferate, but production roll-outs narrow to low-blast-radius workflows until traceability, monitoring, and rollbacks are real, not promised.

The winners won’t be the loudest “agentic” teams; they’ll be the most governable

Dataiku’s AI Factory Accelerator lands in a market where “agentic AI” is colliding with the oldest enterprise constraint of all: accountability. The platform narrative is getting louder, not because companies love platforms, but because platforms are where you can enforce the controls that determine whether AI expands or gets quarantined.

The honest bet isn’t perfect explainability. It’s bounded opacity, backed by infrastructure for trust, especially in regulated industries where sovereignty and auditability shape what can be deployed at all. “Putting governance in place is like paving a highway. It takes effort upfront, but then you can move quickly and reliably, rather than stumbling your way through deployment.”

The market test for “AI factory” promises is simple: not how fast you can demo an agent, but how fast you can ship one with traceability, monitoring, and an exit hatch. And in the end, adoption will still hinge on measurable outcomes, not productivity folklore. “Where organisations succeed in proving AI is delivering is when they can point to very specific use cases where a core process changed, and the business impact is measurable: more revenue generated or losses reduced. Without that level of specificity, ‘everyone is a bit more efficient’ will not cover it.”

The next wave of enterprise AI won’t be won by intelligence alone. It will be won by governed intelligence: systems you can trace, justify, and shut down before they become tomorrow’s headline.