Why Nvidia Spent 2025 Buying Control of the AI Economy

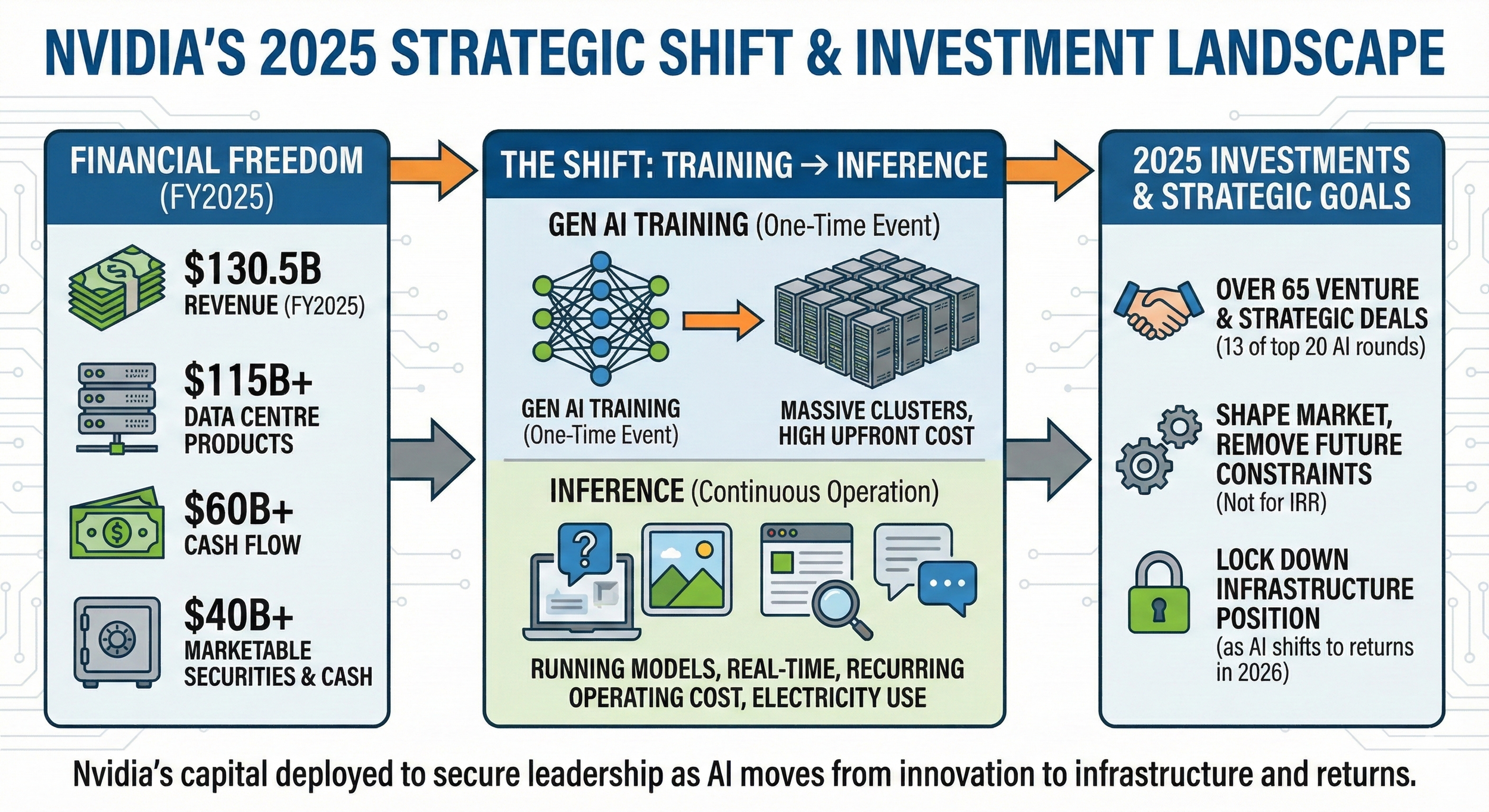

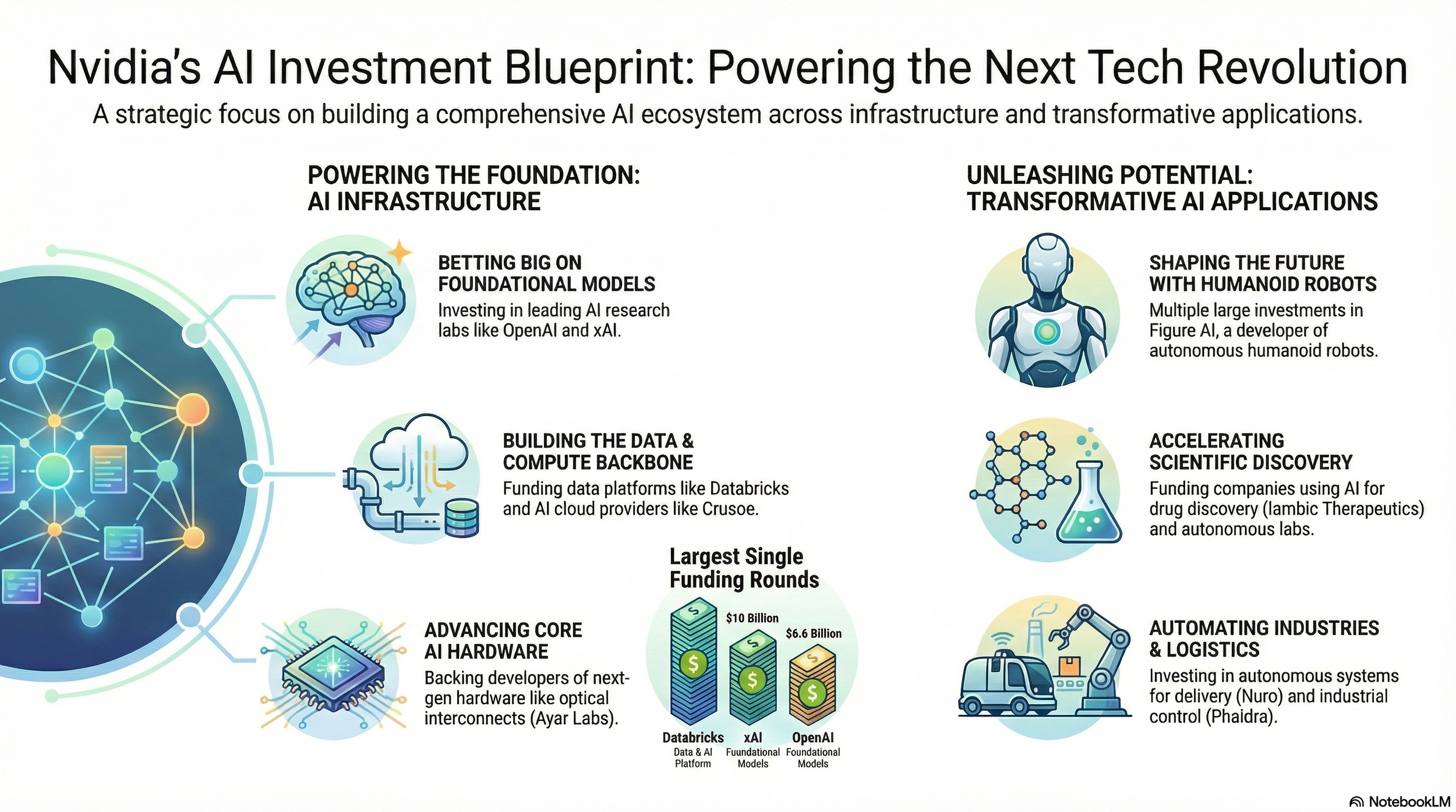

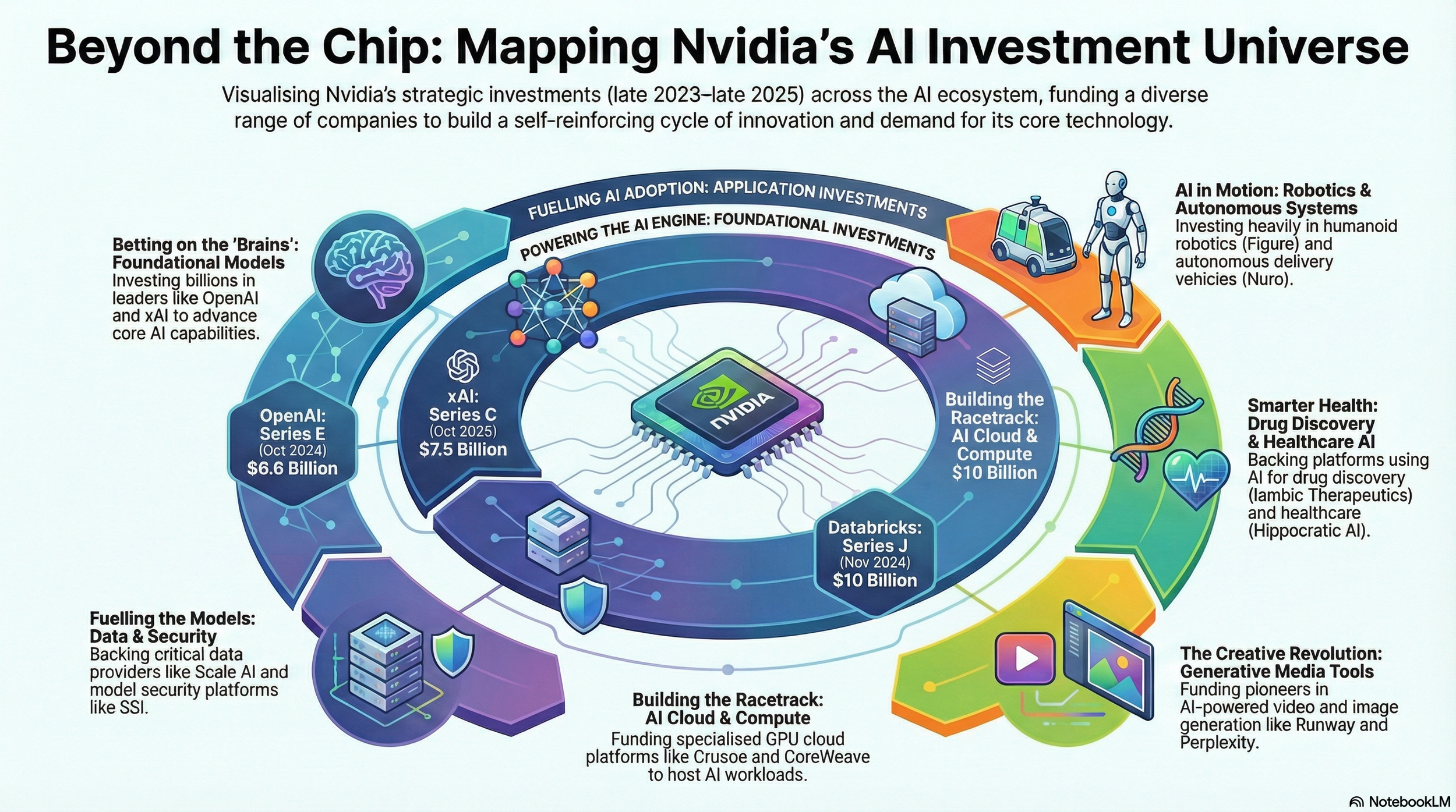

In 2025, Nvidia participated in over 65 venture and strategic deals. This includes direct corporate investments and activity through its NVentures arm. By Christmas of 2025, Nvidia had established itself at the "top rung" of corporate venture capital (CVC), participating in 13 of the year’s 20 largest AI funding rounds.

The chip giant’s financial position gave it unusual freedom. In its fiscal year 2025, the company reported $130.5 billion in revenue, with over $115 billion from its data centre products, and cash flow exceeding $60 billion.

When 2025 ended, Nvidia held more than $40 billion in cash and marketable securities. That balance sheet gave the chipmaker a cushion to deploy capital for strategic rather than purely financial ends. None of its 2025 investments was designed to maximise internal rate of return (IRR); they were used to shape the market by removing future constraints.

To understand this, let us take a step back. By the beginning of 2025, Nvidia had already achieved what most technology companies spend decades chasing: its chips powered the majority of advanced AI systems, and its software stack had become the default layer for developers.

Historically, once a technology shifts from innovation to infrastructure, leadership is no longer secured by being faster or better; it is secured by controlling cost, access, distribution, power, and compatibility. Each of Nvidia’s 2025 investments is a strategic effort to lock in its position as the AI industry’s focus shifts towards returns in 2026.

This is how Nvidia organised its capital across distinct sectors, why each sector mattered, and how every major 2025 investment fits into that logic.

The shift that forced Nvidia’s hand: inference replaces training

In the first year of generative AI, the focus was on training, which is a big, one-time event. To train these models, companies build massive clusters, run them for weeks or months, spend a lot of money upfront, and then stop. These projects are expensive and dramatic, but they do not happen all the time: training is about pushing machines as hard as possible for a limited period.

During this period, Nvidia’s general-purpose GPUs were effective because they could handle many different kinds of heavy calculation at high speed. Things started to change from mid-2024 and into 2025. The work moved from training to something bigger: inference, which means running these models to answer questions, generate images, rank search results, handle customer chats and make decisions in real time.

The total amount of computing used for inference soon exceeded the amount used for training. Inference behaves differently from training: it runs all the time. Every second inference runs, it consumes electricity. Delays show up directly in what the user sees. Because inference never stops, its costs repeat every day, and those costs show up in operating budgets, not as one-off investments.
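The difference between a one-off training bill and a recurring inference bill can be made concrete with a small calculation. The sketch below is illustrative only: the function name and both dollar figures are hypothetical assumptions chosen for round numbers, not data from Nvidia or any customer. It finds the first day on which cumulative inference spend overtakes the upfront training cost.

```python
import math


def days_until_inference_exceeds_training(
    training_cost: float, daily_inference_cost: float
) -> int:
    """Return the first day on which cumulative inference spend
    is strictly greater than the one-off training cost."""
    if daily_inference_cost <= 0:
        raise ValueError("daily_inference_cost must be positive")
    # After n days, cumulative spend is n * daily_inference_cost.
    # The smallest n with n * daily > training is floor(training / daily) + 1.
    return math.floor(training_cost / daily_inference_cost) + 1


# Hypothetical figures, for illustration only.
training_cost = 50_000_000        # one-off training run, in dollars
daily_inference_cost = 250_000    # recurring serving cost per day, in dollars

crossover_day = days_until_inference_exceeds_training(
    training_cost, daily_inference_cost
)
print(f"Inference spend overtakes training cost on day {crossover_day}")
```

Under these assumed numbers the recurring bill passes the upfront one in under a year, and it keeps growing afterwards, which is why the cost moves from a capital line to an operating one.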

Once this happens, attention inside a company expands from engineers and research teams to finance teams, because inference affects monthly expenses and profit margins. The questions change: Can we do this cheaper? Can we run it closer to where the data is created? Do we really need the most powerful hardware for every task?

A major part of Nvidia’s 2025 investment decisions is an early response to this shift in thinking. The company is now focused on a world that runs AI rather than one that builds it, and running AI is an economic problem.

Sector one: inference hardware and architecture

The most consequential move of the year came at the end of 2025, with Nvidia’s reported approximately $20 billion transaction involving the inference IP and engineering talent of Groq. Groq is not a training competitor; its architecture is designed around inference: predictable latency, consistent throughput, and lower power per query.

Those characteristics matter far more in production environments than in research settings.

Once inference dominates, buyers stop optimising for flexibility and start optimising for economics. This is the stage at which infrastructure markets typically unbundle, with cheaper, specialised solutions eroding the margins of premium general-purpose platforms.

Nvidia’s Groq move was not about near-term revenue. It was about preventing inference from becoming the layer where Nvidia’s pricing power collapses. By internalising inference-optimised architecture, Nvidia ensured that efficiency improvements would remain part of its own stack rather than becoming an external competitive wedge.

The size of the transaction reflects the risk Nvidia was addressing, not the revenue Groq generated.

Sector two: compute ownership and AI factories

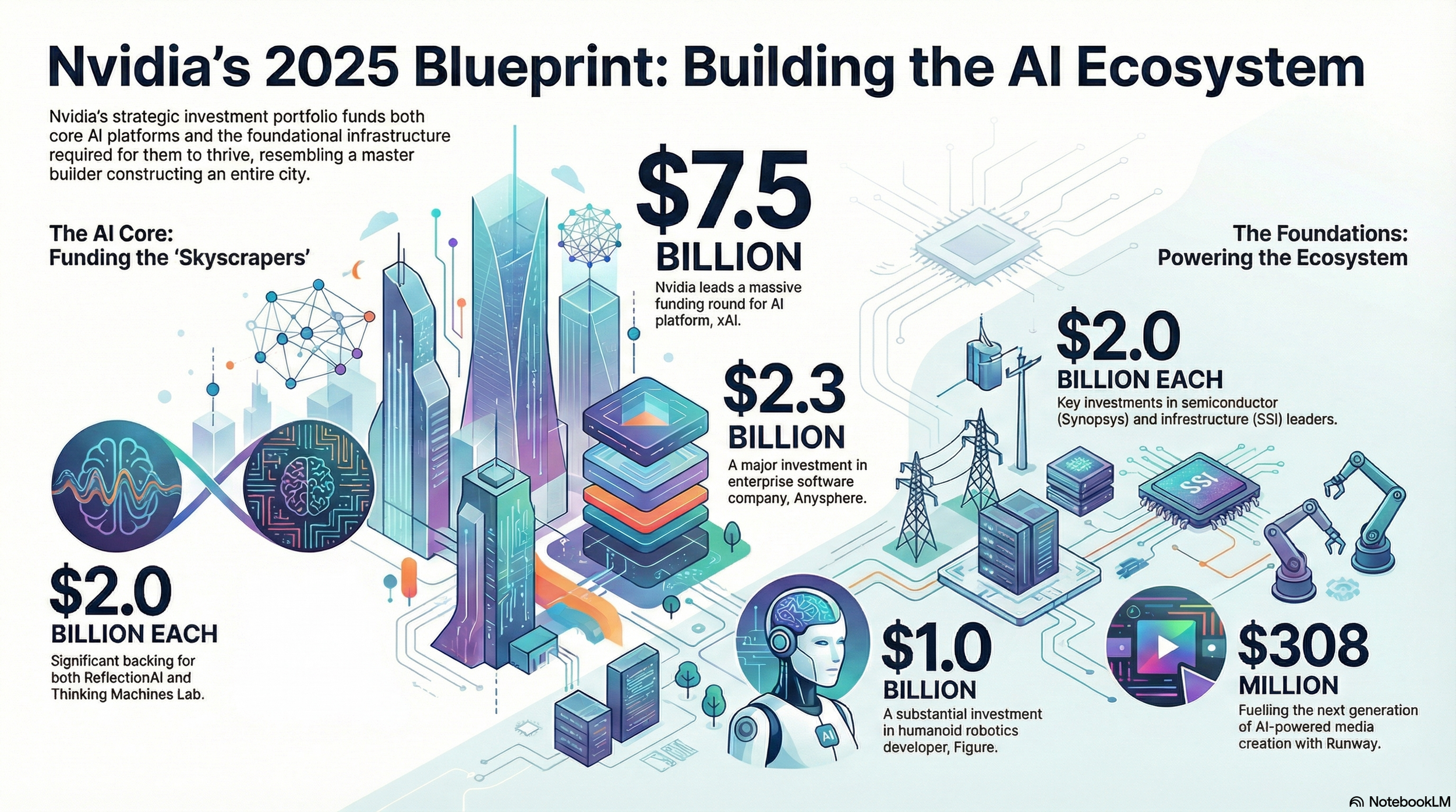

Protecting inference performance is not enough if Nvidia loses control over where inference runs. This explains Nvidia’s repeated investments in companies that own and operate compute capacity, rather than simply consuming it. In 2025, Nvidia participated in major rounds for Together AI ($305 million), Lambda ($480 million), Crusoe ($1.4 billion), and Firmus Technologies ($330 million).

These companies form an alternative layer of infrastructure ownership. They convert electricity and capital into inference services without forcing Nvidia to carry the balance-sheet risk of building data centres itself.

The strategic importance is straightforward. If hyperscalers were the only entities capable of running large-scale inference, they would eventually dictate pricing, architecture, and supply terms. By helping capitalise independent “AI factories,” Nvidia broadens the capacity market while keeping its hardware and software at the centre of it.

This is supply-side risk management, not diversification.

Sector three: frontier model labs and sovereign AI

Nvidia’s investments in frontier model developers are often described as backing innovation. In reality, they are about managing customer power. Frontier model developers are the companies building the largest, most advanced AI models that push the limits of current computing power — and in doing so, consume massive amounts of GPU compute and influence the entire AI ecosystem.

In 2025, Nvidia participated in large rounds for Mistral AI (€1.7 billion), Cohere ($500 million), Thinking Machines Lab ($2 billion seed), Reflection AI ($2 billion), and Reka AI ($110 million).

If inference demand concentrates in the hands of two or three dominant labs, those buyers gain leverage over hardware suppliers. They negotiate aggressively. They push for custom silicon. They influence roadmaps.

Nvidia’s response has been to ensure that demand remains fragmented across many well-funded, compute-hungry organisations, including “sovereign AI” efforts outside the US. Capital flows out of Nvidia and returns via GPU purchases, leases, and long-term infrastructure commitments. This circular flow attracts regulatory attention, but it stabilises Nvidia’s position at the centre of the ecosystem.

Sector four: enterprise and applied AI

While frontier labs create scale, enterprise deployments create durability. In 2025, Nvidia backed companies that embed AI into operational workflows, including Runway ($308 million), Uniphore ($260 million), and Hippocratic AI ($141 million).

These are not fast-moving consumer plays; they operate in regulated, politically complex environments where adoption is slow but sticky. For example, once inference becomes part of a healthcare workflow, a customer-support system, or a media production pipeline, it becomes recurring operational spend rather than discretionary experimentation. For Nvidia, this matters because it smooths demand across cycles. Enterprise inference does not disappear when hype fades.

Sector five: telecom, edge compute, and national infrastructure

If Groq addressed how inference runs, Nokia addressed where it runs next. In late 2025, Nvidia committed $1 billion to Nokia, acquiring roughly a 3% stake and deepening a partnership focused on AI-native telecom infrastructure, particularly AI-RAN.

Inference is moving outward from hyperscale data centres. Factories, ports, hospitals, autonomous systems, and cities require low-latency processing close to where data is generated. Telecom networks are the natural execution layer.

Nokia already operates inside regulated national infrastructure, with carrier relationships and upgrade cycles measured in decades. By investing directly, Nvidia embeds itself into an environment where switching suppliers is difficult and displacement is slow.

Industry projections cited alongside the partnership estimate the cumulative AI-RAN opportunity at more than $200 billion by 2030. Nvidia chose equity because it wants to be structurally embedded, not merely a vendor.

Sector six: energy, power, and materials

The final cluster of Nvidia’s 2025 investments acknowledges a constraint that no amount of software optimisation can remove. AI scaling is colliding with power limits.

In 2025, Nvidia participated in an $863 million round for Commonwealth Fusion Systems, invested $350 million in Redwood Materials, and also provided funding for TerraPower.

These investments are not speculative side bets. They reflect the reality that data-centre growth is increasingly constrained by grid capacity, cooling, and storage. Power availability will determine how far inference can scale. Nvidia is not trying to become an energy company. It is trying to ensure that energy constraints do not become someone else’s leverage over its future.

What the full picture reveals

When Nvidia’s 2025 investments are viewed together, they stop looking like a series of disconnected strategic bets and start looking like a company responding to a very specific problem: how to remain central once AI becomes infrastructure rather than innovation.

Each category of investment addresses a different point where Nvidia’s influence could weaken over time.

The inference hardware moves reflect concern that the economics of running AI — not training it — will determine long-term margins. The compute infrastructure investments are aimed at preventing a small number of cloud providers from becoming gatekeepers with the power to dictate pricing and architectural choices.

The funding of frontier and sovereign model labs reduces the risk that demand concentrates in the hands of a few customers capable of exerting pressure on Nvidia’s roadmap. Enterprise AI investments anchor inference usage inside slow-moving, regulated organisations where workloads persist regardless of hype cycles.

The telecom and edge investments acknowledge that AI execution is spreading beyond centralised data centres into networks and national infrastructure. The energy and materials investments recognise that power availability, not software innovation, is becoming the limiting factor for further scale.

None of these moves make much sense in isolation. Together, they describe a company that is no longer optimising for product leadership alone, but for control over the conditions that determine how, where, and at what cost AI is deployed.

This is why Nvidia’s 2025 capital allocation looks unusual compared with traditional corporate venture activity. The company is not primarily seeking financial upside from individual investments. It is attempting to shape the structure of the market it depends on, reducing the number of ways customers, partners, or regulators could eventually force it into a weaker position.

Seen this way, Nvidia’s spending only appears excessive if one assumes that the next phase of AI will resemble the last — a sequence of training races won by faster chips. Nvidia’s behaviour suggests it expects something else: a slower, more regulated, more cost-sensitive phase in which control over infrastructure matters more than raw performance.